[AINews] How To Scale Your Model, by DeepMind

Chapters

AI Twitter Recap

AI Subreddit Recap

Artificial Intelligence and Model Discussions

Latent Space Discord

OpenRouter (Alex Atallah) Discord

Discussion on Aider (Paul Gauthier) - General Messages and Questions

OpenAI, Google, and SoftBank Partnerships and Updates

Discussions on AI Models, Robotics, and Hardware Ambitions

Exploring GPU Mode Discussions

Performance Metrics and Insights

Mojo Insights

Discord Channel Interactions

AI Twitter Recap

AI Model Releases and Research Papers

- "ASAP": A Real2Sim2Real Model for Humanoid Robotics: @DrJimFan announced "ASAP," a model that enables humanoid robots to perform fluid motions inspired by Cristiano Ronaldo, LeBron James, and Kobe Bryant. The team, including @TairanHe99 and @GuanyaShi, has open-sourced the paper and code. The approach combines real-world data with simulation to close the "sim2real" gap in robotics.

- "Rethinking Mixture-of-Agents" Paper and "Self-MoA" Method: @omarsar0 discussed a new paper, "Rethinking Mixture-of-Agents," which questions the benefit of mixing different LLMs. The proposed "Self-MoA" method exploits in-model diversity by aggregating multiple outputs from the single top-performing LLM, and it outperforms traditional MoA approaches.

- Training LLMs with GRPO Algorithm from DeepSeek: @LiorOnAI highlighted a new notebook that demonstrates training a reasoning LLM using the GRPO algorithm from DeepSeek. In less than 2 hours, it turns a model like Qwen 0.5 (500 million parameters) into a math reasoning model.

- Bias in LLMs Used as Judges: @_philschmid shared insights from the paper "Preference Leakage: A Contamination Problem in LLM-as-a-Judge," which shows that LLMs can be significantly biased when used for both synthetic data generation and evaluation. The study emphasizes the need for multiple independent judges and human evaluation to mitigate this bias.

- mlx-rs: Rust Library for Machine Learning: @awnihannun introduced mlx-rs, a Rust library that includes examples of text generation with Mistral and MNIST training. It is a useful resource for those interested in Rust and machine learning.

AI Tools and Platforms Announcements

- Hugging Face's AI App Store Launched: @ClementDelangue announced that Hugging Face has launched its AI app store with 400,000 total apps, 2,000 new apps added daily, and 2.5 million weekly visits. Users can now search through apps using AI or by category, and he emphasized that "the future of AI will be distributed."

- AI App Store Announcement: @_akhaliq echoed the excitement about the launch of the AI App Store, calling it the best place to find the AI apps you need, with approximately 400k apps available. Developers can build apps, and users can discover new ones using AI search.

- Updates to 1-800-CHATGPT on WhatsApp: @kevinweil announced new features for 1-800-CHATGPT on WhatsApp:

  - You can now upload images when asking a question.

  - You can use voice messages to communicate with ChatGPT.

  - Soon, you'll be able to link your ChatGPT account (free, plus, pro) for higher rate limits.

- Replit's New Mobile App and AI Agent: @hwchase17 shared that Replit launched a new mobile app and made its AI agent free to try. The rapid development of Replit's AI Agent is notable, and @amasad confirmed the release.

- ChatGPT Edu Rolled Out at California State University: @gdb reported that California State University is becoming the first AI-powered university system, with ChatGPT Edu being rolled out to 460,000 students and over 63,000 staff and faculty.

AI Events, Conferences, and Hiring

- AI Dev 25 Conference Announced: @AndrewYNg announced AI Dev 25, a conference for AI developers happening on Pi Day (3/14/2025) in San Francisco. The event aims to be a vendor-neutral meeting for AI developers, with over 400 developers gathering to build, share ideas, and network.

- Hiring for Alignment Science Team at Anthropic: @sleepinyourhat mentioned that the Alignment Science Team at Anthropic is hiring.

AI Subreddit Recap

Theme 1. DeepSeek R1 & R1-Zero: Rapid Model Training Achievements

- A DeepSeek researcher claims that the R1 and R1-Zero models were trained in just 2-3 weeks, prompting skepticism and discussion of model training speed and potential bottlenecks. Users compare DeepSeek's models to other recent advances and state their preferences.

Theme 2. DeepSeek-R1 Model: Implications of Shorter Correct Answers

- DeepSeek-R1's correct answers are generally shorter than its incorrect ones, with discussion of task difficulty, model behavior, and related research. Users analyze how token lengths differ between correct and incorrect answers.

Theme 3. OpenAI Research: Embracing Open-Source via Hugging Face

- Hugging Face released an open-source reproduction of OpenAI's Deep Research, promoting open-source deep research in AI. Users appreciate the move and discuss its implications for the AI community.

Theme 1. OmniHuman-1: China's Multimodal Marvel

- The OmniHuman-1 project focuses on video generation from single images, sparking discussion of media authenticity, AI's impact on creative industries, and technical observations and challenges.

Theme 2. Huawei's Ascend 910C Challenges Nvidia H100

- Huawei's Ascend 910C chip is reported to match Nvidia's H100 in performance, with discussion of CUDA's dominance, Huawei's competitive position, market dynamics, and open source in AI development.

Theme 3. O3 Mini: OpenAI's Usability Leap

- The O3 Mini model impresses users initially but struggles to provide effective solutions. Users discuss how well AI models generalize across tasks and the reliability of other models such as the Claude series.

Theme 4. OpenAI Unveils OpenAI Sans Font

- OpenAI introduces a new font as part of its branding strategy, leading to comparisons with Apple's design ethos and discussion of branding, innovation, and audience reception.

Artificial Intelligence and Model Discussions

This section covers the AI models and tools the community prefers. Users weigh OpenRouter against direct API access for better uptime and provider prioritization. Automation of file management in Aider is requested, and there are questions about Aider's chat modes. The section also covers reactions to Cursor IDE updates and alternatives such as Supermaven, along with concerns about the cost of tools like Cursor and GitHub Copilot. DeepSeek models, including DeepSeek R1 600B, and Anthropic's classifiers are evaluated for performance. The discussion extends to ethical considerations in AI training data and the challenges posed by copyright law. Users debate the concept of 'hallucination' in LLM outputs and share insights on generating mini-games with O1 Pro. LM Studio's support limitations and its enhancements with RAG are also highlighted. Finally, the section touches on skepticism about the M4 Ultra's performance, the community's mobilization around Cursor, and the potential impact of OpenAI's entry into the hardware market.

Latent Space Discord

Anthropic Challenges Users with Claude Constitutional Classifiers: Anthropic launched their Claude Constitutional Classifiers, inviting users to attempt jailbreaks at 8 difficulty levels to test new safety techniques preparing for powerful AI systems. The release includes a demo app designed to evaluate and refine these safety measures against potential vulnerabilities.

FAIR's Internal Conflicts Spark Debate Over Zetta and Llama: Discussions on social media highlighted internal dynamics at FAIR concerning the development of Zetta and Llama models, specifically around transparency and competitive practices. Key figures like Yann LeCun suggested that smaller, more agile teams have innovated beyond larger projects, prompting calls for a deeper examination of FAIR's organizational culture.

Icon Automates Ad Creation: Icon, blending ChatGPT with CapCut functionalities, was introduced to automate ad creation for brands, with the capability to produce 300 ads monthly. Supported by investors from OpenAI, Pika, and Cognition, Icon integrates video tagging, script generation, and editing tools to enhance ad quality while significantly reducing expenses.

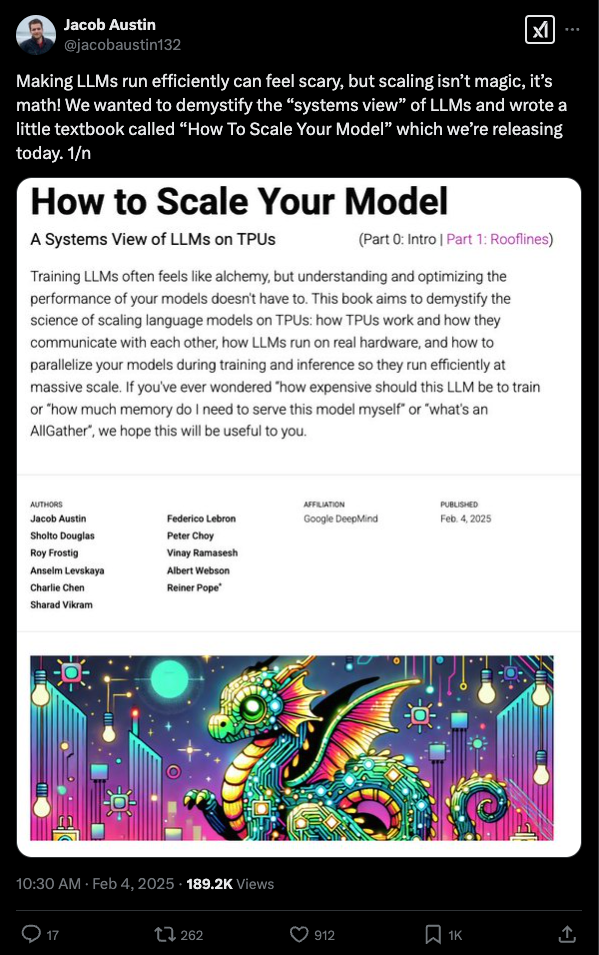

DeepMind Drops Textbook on Scaling LLMs: Google DeepMind released a textbook titled How To Scale Your Model, available at jax-ml.github.io/scaling-book, demystifying the systems view of LLMs with a focus on mathematical approaches. The book emphasizes understanding model performance through simple equations, with the aim of running large models more efficiently, and it is built around the JAX software stack and Google's TPU hardware.

Pi0 Unleashes Autonomous Robotic Actions via Natural Language: Physical Intelligence team launched Pi0, an advanced Vision Language Action model that uses natural language commands to enable autonomous actions, now available on LeRobotHF. Alongside the model, pre-trained checkpoints and code have been released, facilitating fine-tuning on diverse robotic tasks.

OpenRouter (Alex Atallah) Discord

Cloudflare has officially partnered with OpenRouter, integrating its Workers AI platform and Gemma models to offer a variety of open-source tools for developers in AI applications. Gemma 7B-IT model, now on Cloudflare, includes tool calling capabilities for enhanced development efficiency. Llama models have been introduced on OpenRouter, providing users with various options for their projects. A specific Llama model can be requested by AI Developers via Discord. Model error display improvements now show the model name in error messages for better user clarity.

Discussion on Aider (Paul Gauthier) - General Messages and Questions

This section summarizes discussions in the Aider (Paul Gauthier) channel. Topics include the performance improvements observed with O1 Pro, debates on weak models in Aider, OpenRouter vs. direct API access, interest in using shell tools for LLM interaction, and challenges with testing and refactoring. It also mentions user requests for additional shortcuts in Windsurf and server installation issues on 32-bit ARM devices, and it includes links to related resources and GitHub projects.

OpenAI, Google, and SoftBank Partnerships and Updates

This section discusses notable partnerships and updates in the AI field involving OpenAI, Google, and SoftBank. Highlights include SoftBank's $3 billion investment in OpenAI products, Google integrating AI capabilities into Google Workspace, the introduction of harmonic loss for neural networks as an alternative to cross-entropy loss, the launch of the MultiChallenge benchmark for evaluating large language models, and the design overhaul of the OpenAI website. These developments signify significant advancements and collaborations shaping the landscape of AI technology.

Discussions on AI Models, Robotics, and Hardware Ambitions

This section delves into various discussions within the AI community, covering topics such as controversial stances by prominent figures like Noam Brown, internal rivalry within teams like Zetta and Llama-1, OpenAI's hardware expansion, concerns about internal communication, and collaborative breakthroughs in robotics. Additionally, conversations around optimizing AI models, hardware challenges, and policy considerations like mandatory licensing and fair use for AI are also highlighted. The details provide insights into the ongoing developments, tensions, and innovations shaping the AI landscape.

Exploring GPU Mode Discussions

The GPU Mode discussions cover FP8 attention's impact on output quality, an explanation of how quantization affects attention, and the contribution of linear layers to output differences under quantization. There is a job posting for a Staff Software Engineer focusing on ML Performance & Systems, along with a call to star a project on model compression. Discussions also touch on CUDA kernel optimization projects, errors in fused attention tutorials, and the GitHub Copilot and Cursor tools. The section includes a link to a video shared by iron_bound.

Performance Metrics and Insights

- Low TPS metrics for a 1.4B model: A user reported close to 14K tokens per second (TPS) for a 1.4B model on 8x80GB A100s, considered this quite low, and asked what further optimization was possible.

- GAS vs. activation checkpointing for improved TPS: Training without activation checkpointing while using gradient accumulation steps (GAS) to raise the effective batch size gave noticeably higher throughput, 242K TPS vs. 202K TPS (about 20% more). Questions were raised about monitoring hardware and model FLOPs utilization (HFU/MFU).

- Model configuration insights shared: The user provided a GitHub configuration link for a baseline speed experiment involving model parallel autoregressive transformers. This shared configuration serves as a resource for improving model training setups.
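To put the throughput figures above in context, here is a rough sketch of a model FLOPs utilization (MFU) estimate. It rests on several assumptions not stated in the discussion: training cost is approximated as 6 FLOPs per parameter per token (attention FLOPs ignored), the 14K TPS figure is read as the aggregate across all 8 GPUs, and the peak is taken as the A100's 312 TFLOP/s dense BF16 rating. The function name is illustrative only.

```python
def model_flops_utilization(tokens_per_sec, n_params, n_gpus, peak_flops_per_gpu):
    """Estimate MFU with the common 6 * N FLOPs-per-token approximation.

    Assumes tokens_per_sec is the aggregate across all GPUs and ignores
    attention FLOPs, so the result is only a rough estimate.
    """
    achieved_flops = 6.0 * n_params * tokens_per_sec
    return achieved_flops / (n_gpus * peak_flops_per_gpu)


# Assumed peak: A100 dense BF16 tensor-core throughput.
A100_BF16_PEAK = 312e12

# 1.4B parameters at about 14K tokens/sec on 8 A100s (figures from the discussion).
mfu = model_flops_utilization(14_000, 1.4e9, 8, A100_BF16_PEAK)

# Relative throughput of the gradient-accumulation run vs. the checkpointing run.
speedup = 242_000 / 202_000

print(f"estimated MFU: {mfu:.1%}")
print(f"GAS vs. activation checkpointing: {speedup:.2f}x")
```

Under these assumptions the 14K TPS run would sit at only a few percent MFU, which is consistent with the user's impression that the number is low. If the figure were per GPU instead, the estimate would be 8 times higher.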

Mojo Insights

In the Mojo 🔥 channel, discussions covered hot reloading mechanisms in Rust using a C ABI, an equivalent of Rust's #[cfg(feature = "foo")] attribute in Mojo, Python's asyncio event loop, the challenges of thread safety in asynchronous APIs, and memory allocation management in futures. Members noted the need for a stable ABI for compatibility, compared Mojo's threading and memory management with Python's asyncio, and discussed how managing memory allocation affects performance. The community also mentioned the uvloop library on GitHub as an efficient asyncio event loop.

Discord Channel Interactions

This section highlights various interactions and discussions that took place in Discord channels related to AI projects. It includes conversations about model surprises, Canadian AI efforts amidst US tariffs, a bug affecting functionality, a webinar on financial semantic search, interactions with a bot, survey on tech content preferences, AI regulation in the EU, shipping inquiries for a product, challenges in Iceberg management, discussions on LLMs vs. traditional ML, feedback on the Open Interpreter project, updates on Gemma models, and improved error feedback mechanisms.

FAQ

Q: What are some recent AI model releases and research papers discussed in this issue?

A: Recent AI model releases and research papers discussed in this issue include ASAP: A Real2Sim2Real Model for Humanoid Robotics, the Rethinking Mixture-of-Agents paper and Self-MoA method, Training LLMs with GRPO Algorithm from DeepSeek, the Bias in LLMs Used as Judges paper, and the mlx-rs Rust library for machine learning. Tool and platform announcements include Hugging Face's AI App Store launch, updates to 1-800-CHATGPT on WhatsApp, Replit's new mobile app and AI agent, and the ChatGPT Edu rollout at California State University.

Q: What are some themes related to AI models and tools discussed in this issue?

A: Themes related to AI models and tools discussed in this issue include DeepSeek R1 and R1-Zero: rapid model training achievements, DeepSeek-R1 model implications, OpenAI research embracing open-source via Hugging Face, OmniHuman-1 focusing on video generation from single images, Huawei's Ascend 910C challenging Nvidia H100, O3 Mini from OpenAI facing usability challenges, and OpenAI unveiling the OpenAI Sans font.

Q: What are some notable AI partnerships and updates mentioned in this issue?

A: Notable AI partnerships and updates mentioned in this issue include SoftBank's $3 billion investment in OpenAI products, Google integrating AI capabilities into Google Workspace, the introduction of harmonic loss for neural networks, the launch of the MultiChallenge benchmark for evaluating large language models, the design overhaul of the OpenAI website, and the partnership between Cloudflare and OpenRouter, which brings Gemma models and other AI tools to developers.

Q: What are some specific user discussions regarding AI models and tools in this issue?

A: Specific user discussions regarding AI models and tools in this issue include concerns about the cost of AI tools like Cursor and GitHub Copilot, performance evaluations of DeepSeek models and Anthropic's classifiers, ethical considerations in AI training data, discussions on 'hallucination' in LLM outputs, insights on generating mini-games with O1 Pro, LM Studio's support limitations, enhancements with RAG, skepticism about the M4 Ultra's performance, community mobilization around Cursor, and the potential impact of OpenAI's hardware market entry.

Q: What are some technical discussions related to AI models and tools mentioned in this issue?

A: Technical discussions related to AI models and tools mentioned in this issue include FP8 attention's impact on output quality, quantization's effect on attention, the contribution of linear layers to output differences under quantization, a job opportunity for a Staff Software Engineer focusing on ML Performance & Systems, model compression projects, CUDA kernel optimization projects, errors in fused attention tutorials, and conversations about the GitHub Copilot and Cursor tools.
